How to Optimize Your VFX Pipeline for Efficient File Management

A VFX pipeline is the end-to-end workflow that visual effects studios use to manage assets, shots, and renders from initial plate delivery through final composite output. Productions often involve 50+ artists and hundreds of iterations per shot, and managing the resulting volume of data requires more than creative software. It demands a solid file management strategy. This guide covers how to improve your pipeline for speed, security, and remote collaboration.

What is a VFX Pipeline?

A VFX pipeline is the system of hardware, software, and people that moves digital assets through every stage of production. Without one, a studio is just a room of artists working in silos. A well-designed pipeline keeps data flowing from one department to the next, cutting errors and freeing up time for creative work.

A single VFX shot may go through 100+ iterations before final approval. Each stage generates massive amounts of data.

Pre-Production

This phase lays the groundwork. It involves storyboarding, concept art, and pre-visualization (previs). The files here are relatively small (images, scripts, and low-poly 3D models), but they establish the metadata and naming conventions that will govern the entire project.

Production

This is where the bulk of the work happens. Assets are created (modeling, texturing, rigging) and shots are animated. This stage generates large 3D files and high-resolution textures, often reaching gigabytes per asset.

Post-Production

The final assembly. This includes lighting, rendering, and compositing. The output is often EXR image sequences in which a single frame can be 50MB or more. At 24 fps, a 5-second shot is 120 frames: about 6GB at 50MB per frame, and more than 10GB once multi-layer frames pass roughly 85MB each. Efficient storage and retrieval are critical here.
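Storage per shot is simple arithmetic: frame count times per-frame size. The sketch below assumes 24 fps and decimal units (1GB = 1000MB); the function name `shot_size_gb` is hypothetical, and real frame sizes vary with resolution, compression, and the number of layers in each EXR.

```python
def shot_size_gb(frame_mb: float, seconds: float, fps: float = 24.0) -> float:
    """Estimate the size of an image sequence in decimal gigabytes.

    Assumes every frame is roughly the same size (frame_mb) and a fixed frame rate.
    """
    frames = round(seconds * fps)  # e.g. 5 s at 24 fps -> 120 frames
    return frames * frame_mb / 1000  # MB -> GB (decimal)


if __name__ == "__main__":
    print(shot_size_gb(50, 5))  # 120 frames at 50MB each
    print(shot_size_gb(85, 5))  # 120 frames at 85MB each
```

At 50MB per frame a 5-second shot comes to 6.0GB; at 85MB per frame it reaches 10.2GB.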

The Hidden Bottleneck: File Transfer

Most technical guides focus on software interoperability, like getting Maya to talk to Nuke. But the real bottleneck in a distributed pipeline is often just moving files around. VFX projects frequently involve 50+ artists across multiple locations. Getting assets between local servers, cloud storage, and freelance workstations is a constant logistical challenge.

Standard internet protocols often fail under the weight of VFX data. Most email services cap attachments at around 20 to 25MB, and standard FTP can be slow and unreliable for multi-gigabyte transfers. When a deadline is looming, waiting four hours for a render to upload is not an option. This is why secure file transfer solutions are becoming a standard part of the pipeline infrastructure.
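A quick estimate shows why upload time becomes the bottleneck. This is a minimal sketch: `transfer_hours` is a hypothetical helper, sizes are in decimal gigabytes, link speed is in megabits per second, and `efficiency` is an assumed fraction of the link you actually achieve after protocol overhead and congestion.

```python
def transfer_hours(size_gb: float, link_mbps: float, efficiency: float = 0.8) -> float:
    """Rough transfer time in hours for size_gb over a link_mbps connection."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    megabits = size_gb * 1000 * 8  # decimal GB -> megabits
    seconds = megabits / (link_mbps * efficiency)
    return seconds / 3600


if __name__ == "__main__":
    # A 10GB shot over a 50 Mbps uplink, assuming the full link is usable
    print(round(transfer_hours(10, 50, efficiency=1.0) * 60, 1), "minutes")
```

At full efficiency, 10GB over 50 Mbps takes 1600 seconds (about 27 minutes); every halving of usable bandwidth doubles that, which is how multi-shot deliveries stretch into hours.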

How to Standardize File Management

Disorganized files lead to broken reference links and lost work. Keeping your pipeline running means enforcing strict standards for how files are named and stored.

Naming Conventions

Adopt a rigid naming convention that is machine-readable. A common format is Project_Seq_Shot_Dept_Task_Ver.Frame.ext, with the frame number separated by a dot. For example, Mov_Sc01_Sh020_Lgt_Main_v003.1001.exr. This allows scripts and asset management software to automatically parse and route files.
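Because the convention is rigid, one regular expression can both validate a name and split it into fields. This is a sketch under assumptions taken from the example name: sequences start with `Sc`, shots with `Sh`, versions are `v` plus three digits, and the frame number is optional so that non-sequence files such as `.nk` scripts also parse. `PATTERN` and `parse_name` are hypothetical names.

```python
import re

# Assumed layout: Project_Seq_Shot_Dept_Task_vVer[.Frame].ext
PATTERN = re.compile(
    r"^(?P<project>[A-Za-z0-9]+)_"
    r"(?P<seq>Sc\d+)_"
    r"(?P<shot>Sh\d+)_"
    r"(?P<dept>[A-Za-z]+)_"
    r"(?P<task>[A-Za-z0-9]+)_"
    r"v(?P<version>\d{3})"
    r"(?:\.(?P<frame>\d+))?"  # frame number is optional (scripts have none)
    r"\.(?P<ext>[A-Za-z0-9]+)$"
)


def parse_name(filename: str) -> dict:
    """Split a convention-compliant filename into its fields, or raise ValueError."""
    m = PATTERN.match(filename)
    if m is None:
        raise ValueError(f"does not follow naming convention: {filename}")
    fields = m.groupdict()
    fields["version"] = int(fields["version"])
    if fields["frame"] is not None:
        fields["frame"] = int(fields["frame"])
    return fields


if __name__ == "__main__":
    print(parse_name("Mov_Sc01_Sh020_Lgt_Main_v003.1001.exr"))
```

Rejecting non-conforming names at the point of entry (rather than fixing them later) is what lets downstream routing scripts trust every field.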

Folder Structure

Mirror your pipeline steps in your folder structure. A typical hierarchy might look like Project > Sequence > Shot > Department > Version. This predictability allows artists to find assets without asking for paths, and it enables automation tools to function correctly.
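If every tool builds paths from the same function, the hierarchy stays predictable. A minimal sketch, assuming the Project > Sequence > Shot > Department > Version layout above and a hypothetical storage root `/projects`; `shot_dir` is an illustrative name, not part of any specific pipeline toolkit.

```python
from pathlib import PurePosixPath


def shot_dir(root: str, project: str, seq: str, shot: str, dept: str, version: int) -> PurePosixPath:
    """Build Project/Sequence/Shot/Department/vNNN under root."""
    parts = [project, seq, shot, dept]
    for part in parts:
        # Reject empty or traversal components so a bad value can't escape the tree
        if not part or "/" in part or part in (".", ".."):
            raise ValueError(f"invalid path component: {part!r}")
    return PurePosixPath(root, *parts, f"v{version:03d}")


if __name__ == "__main__":
    print(shot_dir("/projects", "Mov", "Sc01", "Sh020", "Lgt", 3))
```

Using `PurePosixPath` keeps the function purely computational (it never touches disk), so it can be shared by artist tools, farm jobs, and transfer scripts alike.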

Version Control

Never overwrite a file. Always increment version numbers (v001, v002). This provides a safety net, allowing you to roll back to previous iterations if a client rejects a change or a file becomes corrupt.
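"Never overwrite" can be enforced in code rather than left to habit. This sketch assumes files are named `<stem>_vNNN.<ext>` in a single folder; `next_version_path` and `save_new_version` are hypothetical helpers. Opening with mode `"xb"` makes the OS refuse to create the file if it already exists, so even a race between two artists cannot clobber a version.

```python
import re
from pathlib import Path


def next_version_path(directory: Path, stem: str, ext: str) -> Path:
    """Return directory/stem_vNNN.ext, one higher than the highest existing version."""
    pattern = re.compile(rf"{re.escape(stem)}_v(\d+)\.{re.escape(ext)}")
    highest = 0
    for p in directory.glob(f"{stem}_v*.{ext}"):
        m = pattern.fullmatch(p.name)
        if m:
            highest = max(highest, int(m.group(1)))
    return directory / f"{stem}_v{highest + 1:03d}.{ext}"


def save_new_version(directory: Path, stem: str, ext: str, data: bytes) -> Path:
    """Write data as a new version; never replaces an existing file."""
    path = next_version_path(directory, stem, ext)
    with open(path, "xb") as f:  # "x" raises FileExistsError instead of overwriting
        f.write(data)
    return path
```

Rolling back is then just a matter of opening an earlier `_vNNN` file, since every iteration is still on disk.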

Sharing Assets with Remote Teams and Clients

Many VFX studios today are decentralized: modelers in London, animators in Los Angeles, a render farm in the cloud. Connecting these locations requires a transfer layer that's fast, secure, and easy to use.

Traditional cloud storage can be clunky for high-volume VFX workflows. Syncing terabytes of raw footage to every artist's local drive is impractical. Studios need a hybrid approach: files stored centrally (or in the cloud) with only necessary assets pulled down on demand.
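The hybrid approach boils down to a cache check: copy an asset from central storage only when an artist actually requests it, and only if the local copy is missing or out of date. A minimal sketch, assuming central storage is reachable as a mounted path and that size plus modification time is a good enough staleness test; `fetch_on_demand` is a hypothetical name, and a production system would more likely use checksums or the storage vendor's own sync API.

```python
import shutil
from pathlib import Path


def fetch_on_demand(rel_path: str, central_root: Path, cache_root: Path) -> Path:
    """Return a local copy of central_root/rel_path, copying only if needed."""
    src = central_root / rel_path
    dst = cache_root / rel_path
    src_stat = src.stat()
    if dst.exists():
        dst_stat = dst.stat()
        if dst_stat.st_size == src_stat.st_size and dst_stat.st_mtime_ns >= src_stat.st_mtime_ns:
            return dst  # cached copy is current; skip the transfer
    dst.parent.mkdir(parents=True, exist_ok=True)
    shutil.copy2(src, dst)  # copy2 also copies mtime, so the next check can compare
    return dst
```

Only the requested file lands in the cache; sibling assets in the same shot folder stay on central storage until someone asks for them.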

For client deliveries, security and presentation matter. A raw folder link looks unprofessional. A branded portal lets you control the experience and track when files are downloaded.

Security Best Practices for VFX

Leaked footage can ruin a movie release and destroy a studio's reputation. Security is not optional. Major studios require adherence to TPN (Trusted Partner Network) guidelines, the industry standard for content security.

Your file sharing system should support granular permissions. An animator working on Scene 5 shouldn't have access to the script for the ending or the assets for Scene 10. Use "least privilege" access controls.
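Least privilege means deny by default and grant only the folders a person needs. The sketch below is illustrative only: the `GRANTS` table and user names are hypothetical, and real systems enforce this in the storage or sharing platform rather than in a script. It compares whole path components (Python 3.9+ `is_relative_to`), so a grant for `Sc05` does not leak access to `Sc050`, and it rejects `..` segments that could climb out of a granted folder.

```python
from pathlib import PurePosixPath

# Hypothetical grants table: user -> folder prefixes they may read
GRANTS = {
    "anim_ana": {"Mov/Sc05"},
    "comp_sup": {"Mov"},
}


def can_read(user: str, path: str, grants: dict = GRANTS) -> bool:
    """True only if path sits inside one of the user's granted folders."""
    target = PurePosixPath(path)
    if ".." in target.parts:
        return False  # refuse traversal tricks like Mov/Sc05/../Sc10
    prefixes = grants.get(user, set())  # unknown users get nothing
    return any(target.is_relative_to(PurePosixPath(p)) for p in prefixes)
```

An animator granted `Mov/Sc05` can open any shot in Scene 5 but nothing from Scene 10.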

All transfers should be encrypted in transit and at rest. While Fast.io is not TPN certified, it offers strong security features like banking-grade encryption and detailed audit logs, both essential for a secure workflow. Remote video editing workflows benefit from these protections when sharing dailies and pre-release content.

Automating the Ingest and Delivery Process

Manual file management is prone to human error. A mature pipeline uses automation for repetitive tasks. Python scripting is the industry standard, linking tools like ShotGrid or ftrack to your storage backend.

When a compositor publishes a version, the pipeline should automatically:

- Save the script to the correct versioned folder.

- Render a low-res proxy for review.

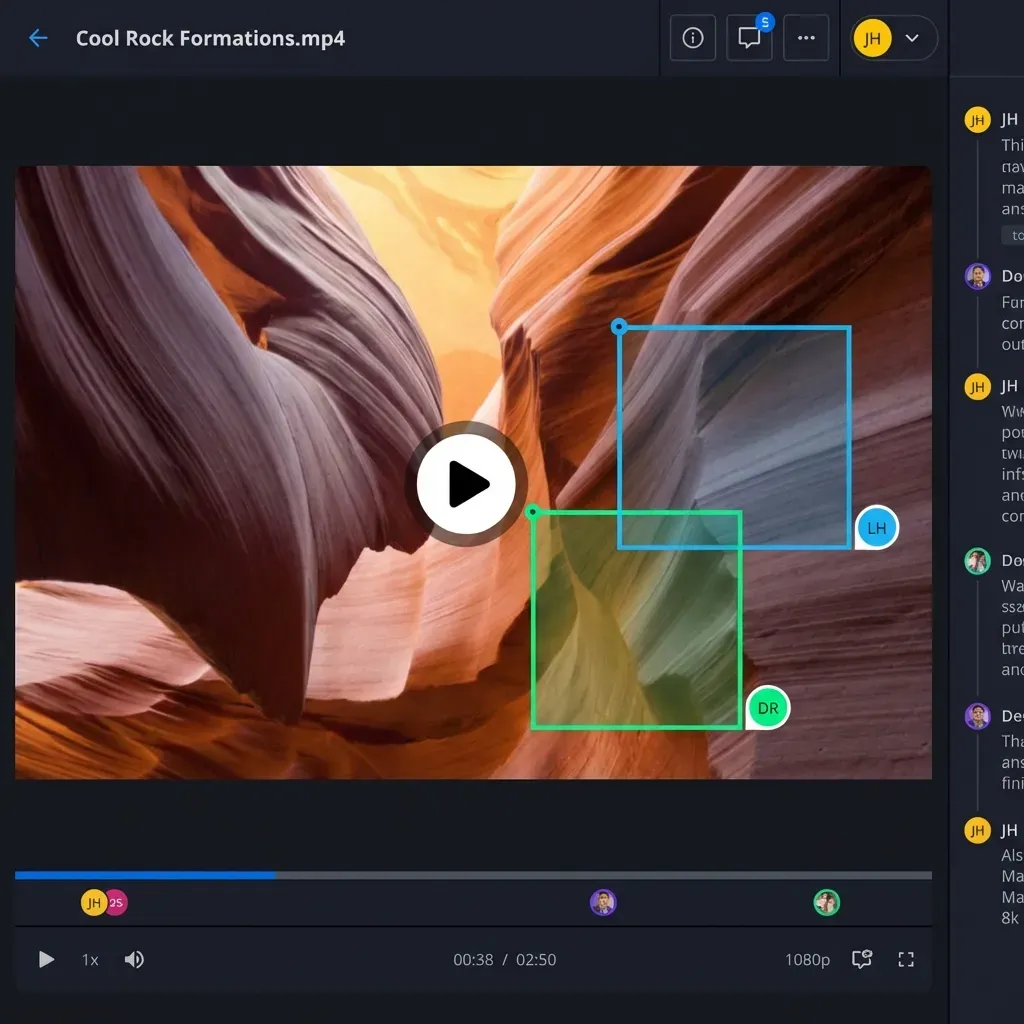

- Upload that proxy to the review platform.

- Notify the supervisor.
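The four publish steps can be chained in one function. This is a sketch, not a ShotGrid or ftrack integration: `render_proxy`, `upload_for_review`, and `notify` are hypothetical callables you would back with your own render, review-platform, and messaging code. The versioned folder is created with `exist_ok=False`, so publishing the same version twice fails loudly instead of overwriting.

```python
import shutil
from pathlib import Path
from typing import Callable


def publish(
    script: Path,
    version_dir: Path,
    render_proxy: Callable[[Path], Path],
    upload_for_review: Callable[[Path], str],
    notify: Callable[[str], None],
) -> str:
    """Run the publish steps in order; any exception stops the chain."""
    # 1. Save the script to the correct versioned folder (refuse to reuse it).
    version_dir.mkdir(parents=True, exist_ok=False)
    published = version_dir / script.name
    shutil.copy2(script, published)
    # 2. Render a low-res proxy for review.
    proxy = render_proxy(published)
    # 3. Upload that proxy to the review platform.
    review_id = upload_for_review(proxy)
    # 4. Notify the supervisor.
    notify(f"{published.name} published; review item {review_id}")
    return review_id
```

Passing the integrations in as callables keeps the step order in one place and makes it easy to swap review platforms or test the chain with stubs.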

When these steps run automatically, artists can focus on the art, not the admin work. Integrating media asset management software can speed this up by tagging and indexing files as they enter the system.
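One form of indexing at ingest is collapsing thousands of per-frame files into one record per image sequence, with its frame range and any missing frames. A minimal sketch, assuming frames are named `<base>.<frame>.<ext>`; `index_sequences` is a hypothetical helper, and a media asset management tool would store richer tags than this.

```python
import re
from collections import defaultdict

# Assumed frame layout: <base>.<frame number>.<ext>, e.g. ..._v003.1001.exr
FRAME_RE = re.compile(r"^(?P<base>.+)\.(?P<frame>\d+)\.(?P<ext>\w+)$")


def index_sequences(filenames):
    """Group per-frame files into sequences and record each frame range and any gaps."""
    frames = defaultdict(set)
    for name in filenames:
        m = FRAME_RE.match(name)
        if m:  # non-sequence files (e.g. .nk scripts) are skipped here
            key = (m["base"], len(m["frame"]), m["ext"])
            frames[key].add(int(m["frame"]))
    index = {}
    for (base, padding, ext), nums in frames.items():
        first, last = min(nums), max(nums)
        index[f"{base}.{'#' * padding}.{ext}"] = {
            "first": first,
            "last": last,
            "missing": sorted(set(range(first, last + 1)) - nums),
        }
    return index
```

Flagging a gap such as a missing frame 1003 at ingest is far cheaper than discovering it in the final composite.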

Frequently Asked Questions

What is the difference between a pipeline and a workflow?

A workflow is the series of steps an individual artist takes to complete a task (e.g., modeling to texturing). A pipeline is the larger system that connects all these workflows. It includes hardware, software, scripts, and file management protocols that tie everything together into a single production line.

How do you handle large file transfers in VFX?

Standard internet tools often fail for massive files like 4K plates or EXR sequences. Studios use accelerated file transfer solutions with specialized protocols to get the most out of available bandwidth, or ship physical hard drives for initial large data dumps. Splitting sequences into zip files is generally discouraged because it slows down access. Purpose-built transfer tools are the better option.

What software is typically used in a VFX pipeline?

A standard pipeline might use Maya or Blender for 3D, Substance for texturing, Nuke for compositing, and ShotGrid (formerly Shotgun) or ftrack for project management. The 'glue' holding these together is usually custom Python scripts and a reliable file system.

Speed Up Your VFX Deliveries

Cut transfer wait times with Fast.io. Share 4K footage and EXR sequences with clients and remote artists in minutes, not hours.